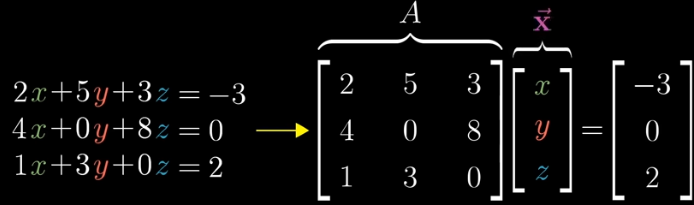

Linear Systems

Linear algebra helps us manipulate matrices, and one of its most important use cases is solving systems of linear equations.

Here, $A$ is a transformation and $\vec{x}$ is the vector we want to find, such that applying $A$ to $\vec{x}$ gives our desired output $\vec{v}$, i.e. $A\vec{x} = \vec{v}$.

Knowing this, if we have the output $\vec{v}$ and a transformation $A$, we could hypothetically find the inverse $A^{-1}$, which gives us the desired vector: $\vec{x} = A^{-1}\vec{v}$.

- This means we can solve for $\vec{x}$ analytically. However, the solution is unique if and only if $\det(A) \neq 0$. If $\det(A) = 0$, the transformation "squeezes" space down by a dimension, so multiple inputs land on the same output. Another way to think about it: "there's no inverse to map 1D to a unique 2D plane"; we can't just "create" another dimension.

- The inverse is what makes this work: "if you first apply $A$, and then apply $A^{-1}$, you end up where you started", i.e. $A^{-1}A\vec{x} = \vec{x}$.

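- There may still be a solution when $\det(A) = 0$, though. A solution is unique iff $\det(A) \neq 0$, but $\det(A) = 0$ does not imply there is no solution: one exists whenever the output vector $\vec{v}$ happens to lie in the squeezed-down output of the transformation $A$.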
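
The points above can be checked numerically. The following is a minimal sketch using NumPy; the matrices `A` and `S` and the vectors `v` and `v_on_line` are made-up illustrative values, not taken from the image. It shows a unique solution when the determinant is nonzero, the "end up where you started" round trip, and a zero-determinant case that still has a solution.

```python
import numpy as np

# Hypothetical 2x2 transformation and desired output (illustrative values only)
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
v = np.array([3.0, 5.0])

# det(A) = 2*3 - 1*1 = 5, which is nonzero, so A has an inverse
det_A = np.linalg.det(A)

# Unique solution of A x = v; solve() avoids forming the inverse explicitly
x = np.linalg.solve(A, v)

# Applying A and then A^{-1} brings x back to where it started
A_inv = np.linalg.inv(A)
round_trip = A_inv @ (A @ x)

# A singular matrix (det = 0): both columns lie on the line spanned by (1, 2)
S = np.array([[1.0, 2.0],
              [2.0, 4.0]])
det_S = np.linalg.det(S)

# This target lies on that line, so solutions exist (infinitely many of them);
# lstsq returns one of them, the minimum-norm solution
v_on_line = np.array([1.0, 2.0])
x_min, *_ = np.linalg.lstsq(S, v_on_line, rcond=None)
```

`np.linalg.solve(S, v_on_line)` would raise `LinAlgError` here because `S` is singular, which is why the sketch falls back to `np.linalg.lstsq` for the zero-determinant case.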

Rank

- When the output of a transformation is a line, we say the Rank is 1

- If all output vectors land in a 2D plane, the Rank is 2

- Therefore, Rank can be thought of as the number of dimensions in the output of the Transformation

- A $2 \times 2$ matrix with Rank 2 means nothing has collapsed, but a $3 \times 3$ matrix with the same Rank means something (some group of vectors or points) has collapsed
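
As a rough illustration of the Rank bullets above, NumPy's `np.linalg.matrix_rank` can report the number of output dimensions. The three matrices below are hypothetical examples chosen only to produce the ranks discussed.

```python
import numpy as np

# Hypothetical matrices, chosen only to illustrate the rank values
line_map = np.array([[1.0, 2.0],
                     [2.0, 4.0]])        # columns are parallel: every output lands on a line
full_2x2 = np.array([[2.0, 1.0],
                     [1.0, 3.0]])        # outputs fill the whole 2D plane
flat_3x3 = np.array([[1.0, 0.0, 1.0],
                     [0.0, 1.0, 1.0],
                     [0.0, 0.0, 0.0]])   # 3D inputs, but every output has z = 0

rank_line = np.linalg.matrix_rank(line_map)   # line: Rank 1
rank_full = np.linalg.matrix_rank(full_2x2)   # plane from 2D input: Rank 2, nothing collapsed
rank_flat = np.linalg.matrix_rank(flat_3x3)   # plane from 3D input: Rank 2, one dimension collapsed
```

Both `full_2x2` and `flat_3x3` have the same Rank, but only the second has lost a dimension relative to its input space.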